AI in English Pronunciation: Integrating Theory, Tools, and Real-World Applications

Background

English has solidified its role as a primary language for international communication, leading to a vast population of non-native speakers striving for proficiency. However, the path to acquiring clear and accurate pronunciation is fraught with challenges.

Limitations of Instructor-Dependent Learning

Traditional pronunciation instruction relies heavily on teachers providing oral feedback. This model is constrained by high costs, large class sizes, and limited classroom time, leaving each learner with too few opportunities for individual practice and corrective feedback.

The Rise of Accessible Technology

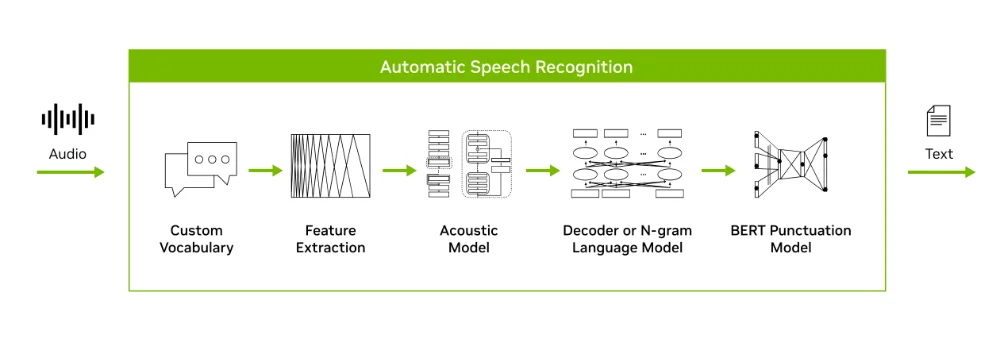

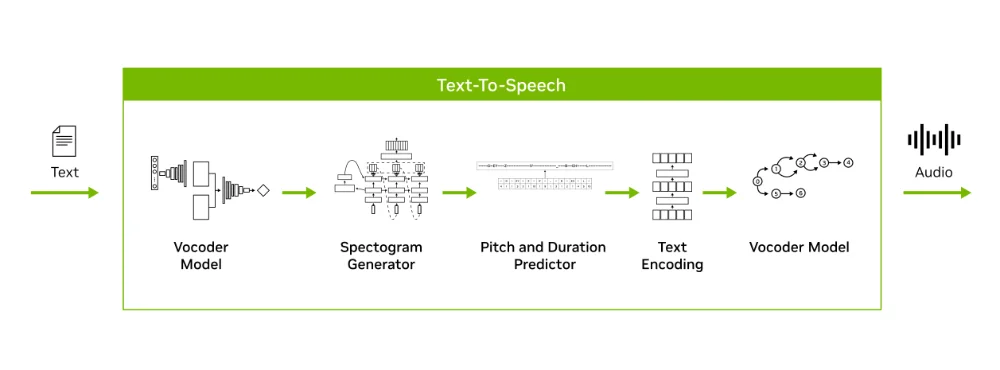

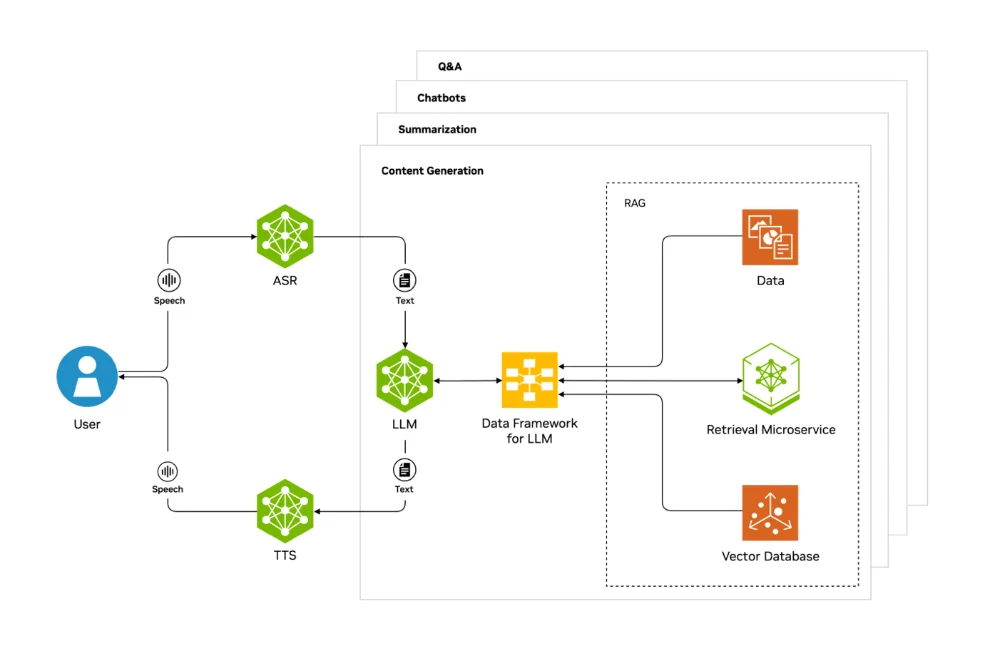

Advances in artificial intelligence are fundamentally shifting this paradigm. Core technologies such as highly accurate automated speech recognition (ASR), natural-sounding text-to-speech (TTS) systems, and responsive AI chatbots are now converging to create powerful, personalized learning tools. These technologies enable learners to practice and receive immediate feedback on their pronunciation anytime and anywhere, breaking free from the traditional constraints of the physical classroom.

Theoretical Foundations Supporting AI in Pronunciation Training

1. Skill Acquisition Theory

This theory posits that learning a complex skill like pronunciation requires a transition from slow, declarative knowledge to fast, automatic procedural knowledge.

AI Application

- Provides the massive amounts of repetitive, deliberate practice necessary for building articulatory muscle memory.

- Offers instantaneous feedback on performance, allowing for real-time correction and refinement.

- Enables focused practice on specific, isolated phonemes or prosodic features to accelerate the path to automatization.

2. The Interaction Hypothesis

Long (1996) argued that language acquisition is most facilitated when communication breakdowns lead to “negotiation for meaning,” pushing learners to adjust their output.

AI Application

- AI chatbots and tutors create contexts for meaningful, goal-oriented interaction.

- Misunderstandings by the AI’s speech recognition trigger negotiation sequences, forcing learners to clarify and modify their pronunciation.

- Provides a safe environment for this type of interactional modification without the time pressure of a live conversation.

3. Sociocultural Theory & Scaffolding

Vygotsky’s theory emphasizes learning through social interaction and guided support within the Zone of Proximal Development (ZPD).

AI Application

- The AI acts as a More Knowledgeable Other (MKO), providing scaffolded feedback tailored to the learner’s current level.

- It can adjust task difficulty and hints to guide the learner through their ZPD, offering support that is gradually withdrawn as competence increases.

- Serves as a patient, always-available practice partner that mediates the learning process.

4. Self-Determination Theory (SDT)

SDT posits that intrinsic motivation is fueled by satisfying three basic psychological needs: autonomy, competence, and relatedness.

AI Application

- Autonomy: Learners have choice and control over their practice sessions.

- Competence: Clear progress tracking and immediate feedback foster a sense of mastery and achievement.

- Relatedness: While limited, gamified elements and interactions with a virtual tutor can simulate a sense of connection, supporting motivation.

Summary

Collectively, these theoretical frameworks provide a multi-faceted justification for the use of AI in pronunciation training. Skill Acquisition Theory, with its emphasis on automaticity, explains the mechanism of learning, highlighting how AI facilitates the deliberate practice needed to develop fluent, automatic performance. The Interaction Hypothesis and Sociocultural Theory address the social and interactive dimensions, framing the AI as a conversational partner and a source of scaffolded support that guides learners through their development. Finally, Self-Determination Theory, together with the related Affective Filter Hypothesis, addresses the psychological and motivational factors, showing how AI can create a low-anxiety environment that fosters learner autonomy, builds confidence, and sustains engagement.

In conclusion, AI-powered tools are not merely technological gadgets; they are versatile platforms capable of operationalizing a wide spectrum of established pedagogical principles. When designed and implemented thoughtfully, they can create a holistic learning ecosystem that effectively addresses the cognitive, social, and affective needs of language learners, making them a powerful ally in the quest for pronunciation proficiency.

The Current State of AI in English Pronunciation Learning

Primary Application Areas for AI in Pronunciation Improvement

Research indicates that pronunciation accuracy and spoken fluency represent the most prevalent domains for AI tool applications. Through speech recognition systems, chatbots, and mobile applications such as ELSA Speak and Duolingo, learners gain access to real-time, personalized pronunciation feedback. Furthermore, AI technology plays a significant role in enhancing learner autonomy, reducing language anxiety, and boosting learning motivation. Some tools are beginning to integrate interactive scenarios to provide pronunciation training with greater communicative authenticity.

Primary User Base of AI Pronunciation Tools

The primary users of AI pronunciation tools are typically beginner and intermediate English learners, with widespread adoption among non-native English speakers in higher education and professional settings. This reflects the current focus of AI pronunciation assistance on serving learners at intermediate levels and below, where its applicability for foundational and general pronunciation training has been well-validated. In contrast, tools for advanced learners or specialized professional contexts requiring deeper pronunciation training remain in the exploratory phase.

Types of AI Tools: Precision Diagnosis and Error Correction vs. Immersive Scenario Interaction

AI-assisted English pronunciation learning can be categorized based on its core functional positioning and learning focus:

🎯 Precision Diagnosis and Error Correction AI

Focus: “Technology-driven pinpointing of pronunciation issues + targeted correction”

Learning Goal: Enhancing pronunciation accuracy

Approach:

- Employs specialized techniques to dissect pronunciation details

- Provides quantifiable and traceable improvement plans

Addresses traditional learning pain points:

- “Not knowing where one is going wrong”

- “Not knowing how to correct it”

Example: ELSA Speak 👉 Official website: https://elsaspeak.com/

Key Features:

- Pinpoints pronunciation errors through phoneme-level analysis

- Delivers real-time demonstration videos of mouth shapes

- Helps learners correct issues such as mispronounced phonemes and misplaced stress
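As a rough illustration of what phoneme-level analysis can involve, the sketch below aligns an expected phoneme sequence with the sequence a recognizer reports and lists the differences. It is not ELSA Speak's method, which this overview does not describe. The function name `align_phonemes` and the ARPAbet-style example transcriptions are assumptions made for illustration only.

```python
def align_phonemes(expected: list[str], produced: list[str]) -> list[tuple[str, str, str]]:
    """Align two phoneme sequences by edit distance and list the differences.

    Returns (operation, expected_phoneme, produced_phoneme) tuples, where the
    operation is "substitute", "delete" (missing phoneme) or "insert" (extra phoneme).
    """
    rows, cols = len(expected) + 1, len(produced) + 1
    # cost[i][j] = edit distance between expected[:i] and produced[:j]
    cost = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        cost[i][0] = i
    for j in range(cols):
        cost[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            step = 0 if expected[i - 1] == produced[j - 1] else 1
            cost[i][j] = min(
                cost[i - 1][j - 1] + step,  # match or substitution
                cost[i - 1][j] + 1,         # expected phoneme was dropped
                cost[i][j - 1] + 1,         # extra phoneme was added
            )

    # Walk back from the bottom-right corner to recover the individual edits.
    errors = []
    i, j = len(expected), len(produced)
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            step = 0 if expected[i - 1] == produced[j - 1] else 1
            if cost[i][j] == cost[i - 1][j - 1] + step:
                if step:
                    errors.append(("substitute", expected[i - 1], produced[j - 1]))
                i, j = i - 1, j - 1
                continue
        if i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            errors.append(("delete", expected[i - 1], ""))
            i -= 1
        else:
            errors.append(("insert", "", produced[j - 1]))
            j -= 1
    errors.reverse()
    return errors


# Hypothetical example: "think" pronounced as "sink".
errors = align_phonemes(["TH", "IH", "NG", "K"], ["S", "IH", "NG", "K"])
print(errors)
```

Dividing the number of reported edits by the length of the expected sequence gives a simple phoneme error rate that a tool could track over time.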

🌍 Immersive Scenario-based Interactive AI

Focus: “Simulating authentic language environments + dynamic interactive guidance”

Learning Goal: Practical pronunciation application skills

Approach:

- Uses scenario-based dialogues and gamified design

- Reduces anxiety about speaking

- Enables learners to “practice pronunciation through usage”

Addresses traditional learning pain points:

- “Correct pronunciation but inability to apply it”

- “Reluctance to speak”

Related tools: To be introduced below

The Educational Benefits of AI for English Pronunciation Learning

Instant and Precise Feedback

Immediate corrective feedback that is not usually available in classrooms.

Personalized Practice

Adaptive content tailored to individual weaknesses.

Safe, Stress-Free Practice Environment

Risk-free speaking practice at any time.

Enhanced Motivation and Self-Direction

Gamification and progress tracking support engagement.

Accessibility and Inclusivity

Reduced barriers of time, place, and teacher availability.

How to Use AI Tools

1) Dedicated Pronunciation Platforms

ELSA Speak and Speak are designed to provide targeted, real-time feedback on pronunciation, intonation, and fluency. ELSA Speak uses phoneme-level diagnosis and mouth-shape demonstrations; Speak functions as an AI conversational tutor for sentence-level practice.

Video demo: ELSA Speak demo

2) AI Conversational Partners

Large language models and voice assistants (e.g., ChatGPT with voice, Google Assistant) can sustain open-ended dialogues, adjust speaking speed and complexity, and simulate scenarios such as interviews.

Video demo: ChatGPT / conversational AI demo

3) AI-Integrated Speech Recognition Systems

Platforms such as Microsoft Azure Speech and Google’s Speech-to-Text power apps with real-time transcription, allowing learners to compare their spoken output to transcription results and self-monitor pronunciation.
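A minimal sketch of this self-monitoring step, assuming the learner already has the transcript string returned by some speech-to-text service (no Azure or Google API is called here). The helper names `tokenize` and `compare_to_transcript` and the example sentences are hypothetical.

```python
import difflib
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into words, ignoring punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def compare_to_transcript(target: str, transcript: str) -> list[tuple[str, str]]:
    """Return (expected, heard) pairs where the transcript differs from the target."""
    expected = tokenize(target)
    heard = tokenize(transcript)
    matcher = difflib.SequenceMatcher(a=expected, b=heard, autojunk=False)
    mismatches = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            mismatches.append((" ".join(expected[i1:i2]), " ".join(heard[j1:j2])))
    return mismatches


# Hypothetical target sentence and recognizer output.
mismatches = compare_to_transcript(
    "I think the ship will leave at three",
    "I sink the sheep will leave at tree",
)
for expected, heard in mismatches:
    print(f"expected '{expected}' but the recognizer heard '{heard}'")
```

A mismatch only shows that the recognizer heard something else; it may reflect a recognition error rather than a pronunciation error, so the flagged words are best treated as candidates for further practice.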

4) Other Tools

Duolingo, Babbel, Rosetta Stone, BoldVoice, Speechling

Examples of AI Applications in Teaching

📌 Case Study 1: “Speechling” for EFL Learners in Thailand

Context: English as a Foreign Language (EFL) learners often have limited access to high-quality learning tools, and their instructors may lack technical expertise, both of which hinder effective pronunciation and speaking practice. To address these challenges, researchers tested an artificial intelligence speech recognition technology (AI-SRT) program called Speechling.

Design

Focuses on personalized pronunciation and speaking practice

Offers real-person voice samples, instant feedback, spaced repetition algorithms, and flexible learning opportunities

Provides a basis for designing pre- and post-test tools, guiding teachers in aligning practice tasks with intermediate EFL course requirements

Utilizes speech-to-text algorithms to convert speech into text, analyze errors, and deliver targeted feedback

Serves as an auxiliary tool to enhance curriculum-based instruction, boost learner engagement, and improve pronunciation accuracy, speaking fluency, and confidence
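The spaced repetition idea mentioned in the design can be sketched with a simple Leitner-box schedule. This is a generic illustration, not Speechling's actual algorithm, which the study summary does not specify. The interval values and the names `PracticeItem` and `review` are assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical review intervals, in days, for Leitner boxes 1 to 5.
INTERVALS = {1: 1, 2: 2, 3: 4, 4: 8, 5: 16}


@dataclass
class PracticeItem:
    text: str
    box: int = 1
    due: date = date.min  # a new item is due immediately


def review(item: PracticeItem, pronounced_well: bool, today: date) -> PracticeItem:
    """Move the item up one box after a good attempt, or back to box 1 otherwise."""
    box = min(item.box + 1, max(INTERVALS)) if pronounced_well else 1
    return PracticeItem(item.text, box, today + timedelta(days=INTERVALS[box]))


# A learner gets "thorough" right once, then mispronounces it at the next review.
item = PracticeItem("thorough")
item = review(item, pronounced_well=True, today=date(2024, 1, 1))
print(item.box, item.due)
item = review(item, pronounced_well=False, today=item.due)
print(item.box, item.due)
```

Items the learner pronounces well are scheduled further apart, while problem items return the next day, which concentrates practice on persistent errors.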

Findings of Dennis (2024)

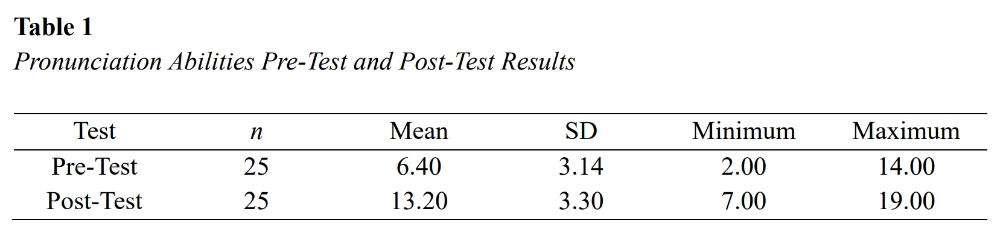

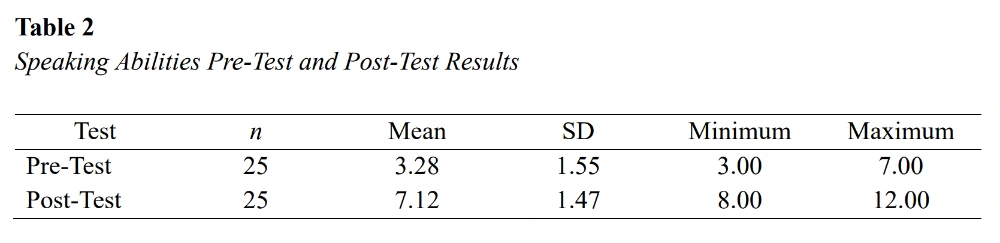

RQ1: Effectiveness of AI-SRT program

Pronunciation Skills

Pre-test mean: 6.40

Post-test mean: 13.20

Statistical significance: p < .05

t-value: 7.46

Speaking Skills

Pre-test mean: 3.28

Post-test mean: 7.12

Statistical significance: p < .05

t-value: 13.11

➡️ Results indicate a substantial positive impact on pronunciation accuracy and speaking proficiency.
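For readers unfamiliar with how a pre-test/post-test t-value is obtained, here is a minimal sketch. It assumes the reported values come from a paired-samples t-test, which the summary above does not state explicitly. The statistic is the mean gain divided by its standard error. The scores below are invented for illustration and are not the study's data; the function name `paired_t` is likewise hypothetical.

```python
import math
import statistics


def paired_t(pre: list[float], post: list[float]) -> float:
    """Paired-samples t statistic: mean gain divided by its standard error."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equally long score lists with at least two learners")
    gains = [after - before for before, after in zip(pre, post)]
    mean_gain = statistics.mean(gains)
    # Standard error of the mean gain, using the sample standard deviation.
    standard_error = statistics.stdev(gains) / math.sqrt(len(gains))
    return mean_gain / standard_error


# Invented scores for four learners (NOT taken from Dennis, 2024).
pre_scores = [5, 6, 7, 8]
post_scores = [9, 9, 12, 12]
t_value = paired_t(pre_scores, post_scores)
print(f"t = {t_value:.2f} with {len(pre_scores) - 1} degrees of freedom")
```

The resulting t-value is then compared against the t distribution with n - 1 degrees of freedom to obtain the p-value.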

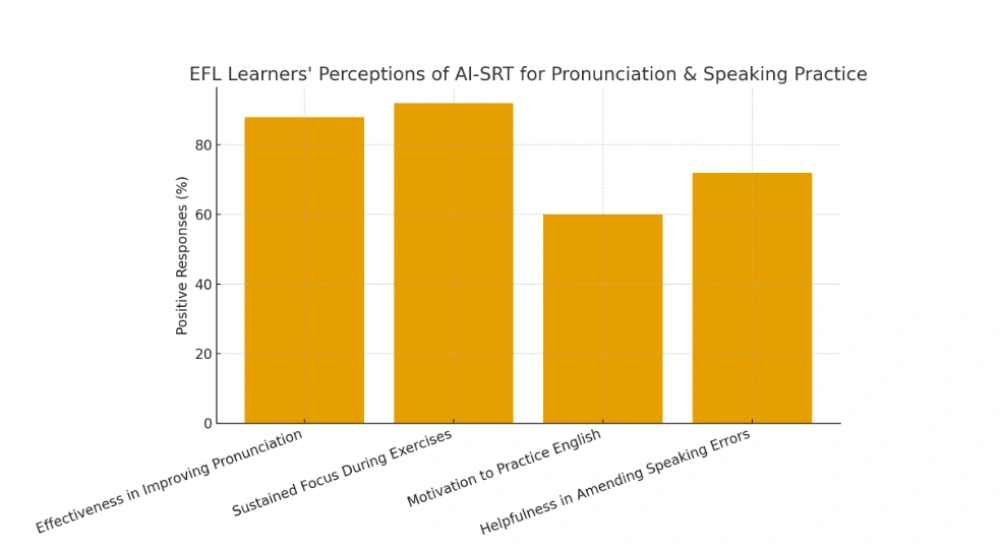

RQ2: Learners’ perceptions and responses

A survey (5-point Likert scale plus one open-ended question) showed highly positive perceptions:

Effectiveness in improving pronunciation: 88% (strongly agree)

Sustained focus during exercises: 92%

Motivation to practice English: 60%

Helpfulness in amending speaking errors: 72%

Key Results

Flexibility (practice anytime/anywhere) and user-friendly interface valued

Immediate, precise feedback and rich vocabulary content highlighted

Adaptive difficulty levels, diverse accents, and authentic scenarios enhanced engagement

Boosted confidence, enjoyment, and accessibility

Overall Summary: In this study, AI speech recognition technology significantly improved pronunciation accuracy and speaking fluency. Learners responded positively to diverse practice formats, flexible settings, and real-time error correction. The approach appears especially effective in contexts that lack a native-speaking environment.

Reference: Dennis, N. K. (2024). Using AI-powered speech recognition technology to improve English pronunciation and speaking skills. IAFOR Journal of Education: Technology in Education, 12(2), 107–126.

📌 Case Study 2: AI in Pronunciation Teaching – EFL Teachers in Cyprus

Context: Pronunciation instruction has long been marginalized in foreign language teaching. To explore how AI can strengthen this area, researchers surveyed 117 in-service EFL teachers in Cyprus about their use of AI for pronunciation teaching and their related beliefs.

Design

Online survey collected data on:

Demographic/professional background (age, experience, education, teaching level, school sector, training)

Frequency of AI use (Likert scale 1–7)

Beliefs about AI (effectiveness, drawbacks, willingness to integrate, Likert scale 1–7)

Statistical analyses: ordinal regression, k-means clustering

Findings of Georgiou (2025)

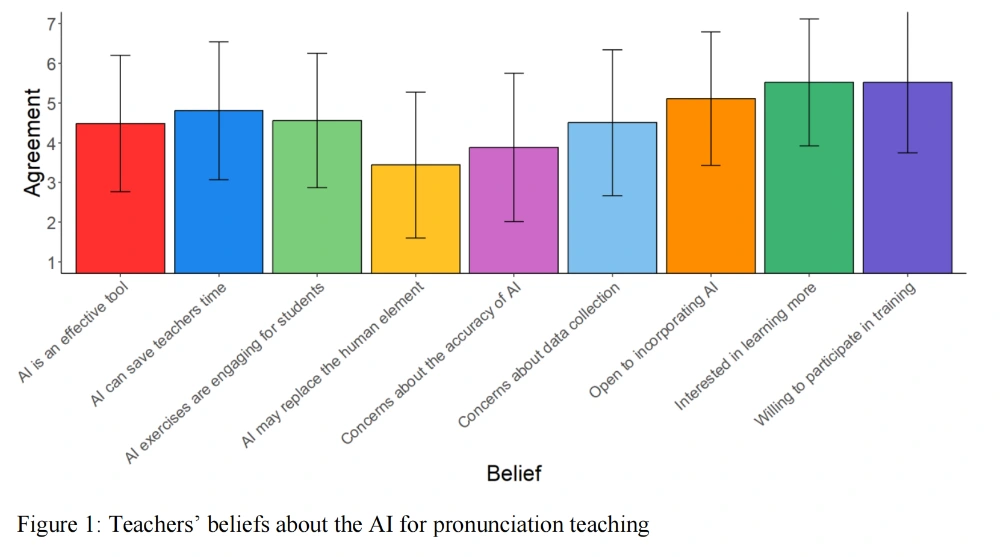

RQ1: Teachers’ agreement on AI effectiveness, drawbacks, and willingness

Perceived effectiveness: Recognized AI as effective, time-saving, and engaging

Willingness to integrate: Most teachers open to adoption, training, and further learning

Perceived drawbacks: Concerns relatively low; main worries were data collection, accuracy, and human replacement

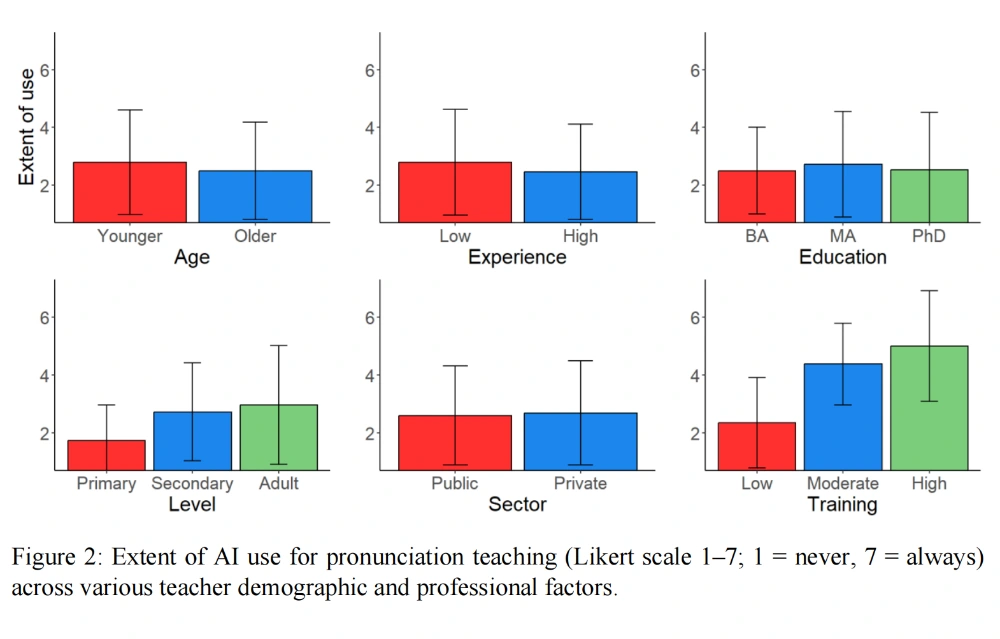

RQ2: Variation in AI use based on demographics/profession

Teaching level: Secondary/adult teachers used AI more than primary (p=0.03; p=0.04)

Training: Moderate/extensive training linked to significantly higher AI use (p<0.001)

No differences: Age, experience, education level, school sector

RQ3: Beliefs variation based on demographics/profession/AI use

AI use: Frequent users held stronger positive beliefs (p<0.001)

Training: Extensive training reduced concerns about human replacement (p=0.01) and data privacy (p=0.02)

Education: MA/PhD holders perceived AI as more effective (p=0.05) and had fewer privacy concerns (p=0.02; p=0.05)

Experience/Age: More experienced/older teachers agreed AI exercises are engaging (p=0.02; p=0.05)

Overall Summary: AI has strong potential to revitalize pronunciation instruction, and teachers hold positive attitudes toward its effectiveness and integration. Teaching level and training were the key drivers of AI use, while beliefs were shaped by frequency of AI use, training, and education. The findings highlight the need for tailored training programs that address both technical skills and ethical issues (e.g., data privacy).

Opportunities and Challenges

Opportunities: Real-time feedback, phoneme-level corrections, diverse scenarios, adaptive teaching support

Challenges: Lack of AI training, data privacy risks, low acceptance among some educators/learners, AI biases, inability to replicate emotional/cultural transmission

References

AI in Language Education, 1(2), 18–29.

Georgiou, G. P. (2025). Artificial Intelligence in Pronunciation Teaching: Use and Beliefs of Foreign Language Teachers. arXiv preprint arXiv:2503.04128.

The Road Ahead: Future Trends, Limitations and Good Practice When Using AI for Speaking

Future Trends

AI for speaking is expected to become more personalized and multimodal, integrating audio, text, and mouth-shape or video guidance, and to become more accent-aware. Integration with mobile learning and LMS platforms should facilitate frequent micro-practice (Li & He, 2023; Sun, 2024; Rahimi, 2022).

Limitations

- AI cannot fully replace human feedback; accent or L1 biases exist in scoring (Kang & Park, 2021).

- Tools can over-correct small segmental errors while ignoring communicative success.

- Dependence on device quality and bandwidth; data privacy concerns when voice is stored or processed by third parties (Ahmad, 2025).

Good Practice

- Use AI as a first listener and practice partner, but keep the teacher as the final assessor.

- Encourage focus on intelligibility, stress and rhythm rather than aiming solely for a native-like accent.

- Explain and negotiate AI feedback in class so learners do not follow it blindly.

- Provide low-tech or offline alternatives for learners with limited access.